Apple Vision Pro

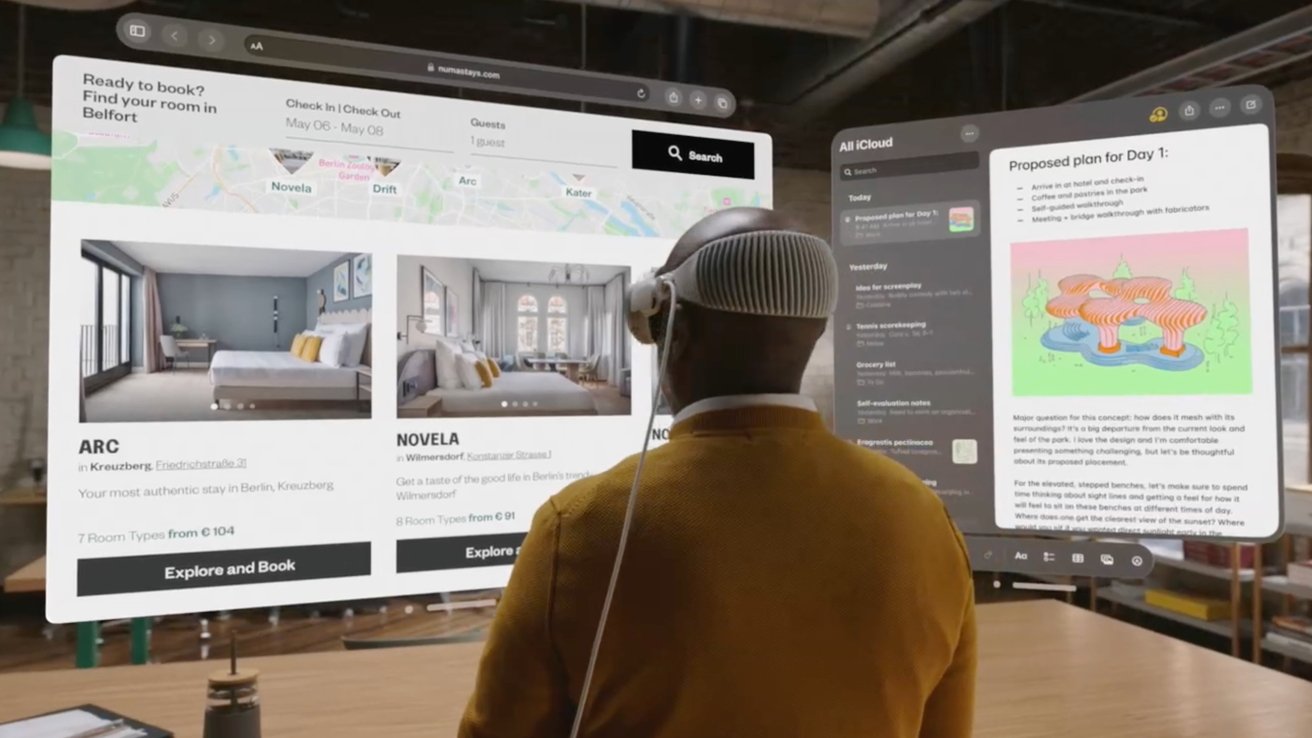

Apple Vision Pro is Apple's first device in the realm of spatial computing. It presents a video feed at approximately 4K resolution per eye and integrates apps and a user interface into the user's surroundings in 3D space. Launched on February 2, 2024, it starts with 256GB of storage and provides access to more than 1 million compatible iPhone and iPad apps through the visionOS App Store.

● Adjust the balance between AR and VR with the Digital Crown.

● Portable design, with an external battery that provides about 2 hours of use.

● A diverse accessory ecosystem, including customizable straps and Light Seals.

● Sharp visuals at approximately 4K resolution per eye.

● Powered by Apple's M2 chip and R1 co-processor for smooth performance.

After years of speculation, Apple has unveiled its highly anticipated Apple VR headset, now known as the Apple Vision Pro. Diverging from traditional VR, this cutting-edge device introduces a mixed reality experience seamlessly blending both augmented reality (AR) and virtual reality (VR) applications.

The Apple Vision Pro, equipped with the visionOS operating system, represents Apple's venture into the realm of "spatial computing." This forward-thinking approach seamlessly integrates software into the user's surroundings, utilizing video passthrough technology.

Unlike transparent goggles or glasses, the Apple Vision Pro is an enclosed headset that blocks the user's direct view of the world. Instead, high-quality cameras capture 3D video of the surrounding environment and relay it to the user on pixel-dense screens.

The Apple Vision Pro is seen as a first step into a new era of computing and a potential precursor to future products, including the long-rumored Apple Glass AR glasses. As its "Pro" designation suggests, it is also expected to pave the way for a more affordable Apple Vision model in the future.

Rumors are circulating about upcoming iterations, with Apple potentially planning the release of four new models, some targeting affordability. However, these advancements may not materialize until 2026.

Apple Vision Pro Design

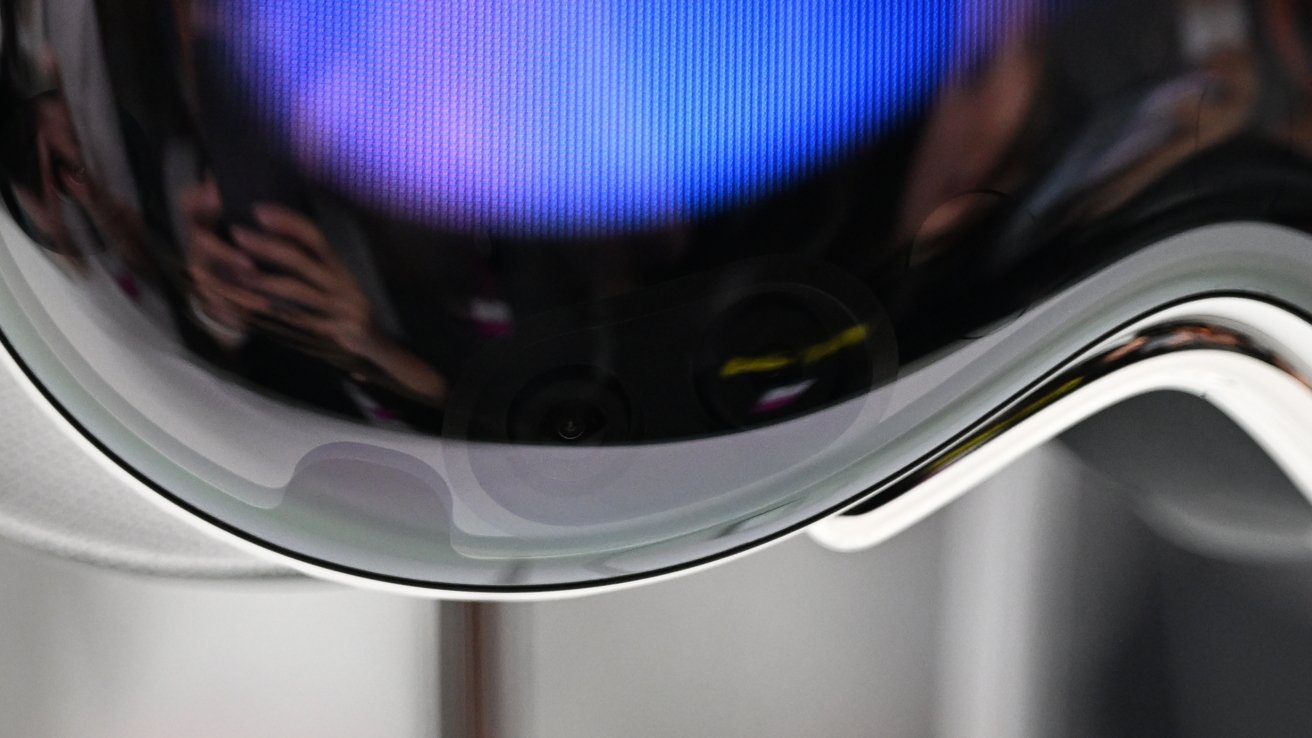

The final product adopts the ski goggle-like design that had been rumored since early 2021. Featuring a curved glass lens housing multiple cameras and an aluminum exterior case, the design embodies a harmonious blend of functionality and aesthetics.

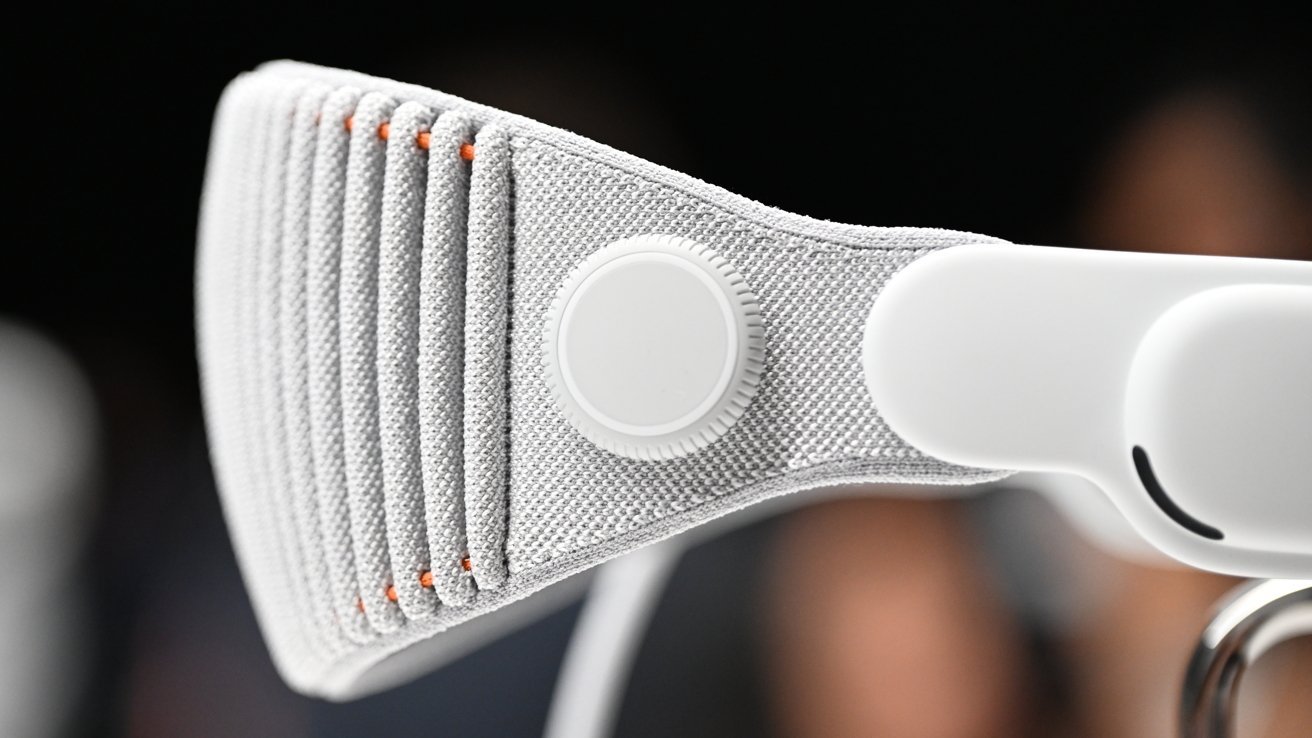

A Digital Crown on the top controls the level of immersion between AR and VR. The Solo Knit Band is knitted as a single 3D piece; it adjusts with a fit dial and attaches with a pull tab for easy removal. While it provides a snug fit, it leaves most of the headset's weight resting on the wearer's face.

Because the headset fits closely around the face, users cannot wear glasses inside it, unlike the PlayStation VR 2. To address this, Apple partnered with Zeiss on lenses sold separately: prescription lenses for $150 and reader lenses for $100.

Small speakers called Audio Pods sit along the sides and direct spatial audio into the user's ears. A button on the top controls specific features such as the 3D camera, while the Digital Crown on the right acts as a Home Button and controls overall immersion.

The headband of the Apple Vision Pro is adjustable and can be swapped for different fits, ensuring personalized comfort.

A power cable attaches to the side of the headset through a proprietary connector and leads to an external battery pack. The battery provides approximately 2 hours of portable use, and it can be plugged into a power source for continuous use.

Because there is no backup power, the entire headset shuts down if the battery runs out. After a shutdown, the headset forgets where windows were placed, so users must arrange them again.

The front glass doubles as an external display for a feature called EyeSight, which lets people nearby see whether the user is paying attention to them.

EyeSight shows the user's eyes on the external display. When the user is fully immersed and cannot see the outside world, a colorful waveform appears instead. When passthrough is active, the user's eyes are shown through a lenticular display. The external display remains blank if the user isn't looking at it.

EyeSight is designed to give users the confidence to wear the headset in a variety of environments. However, widespread adoption of such technology may still face social challenges.

Because EyeSight relies on the user's Persona, it can fall into the uncanny valley. Personas are still in beta, so occasional bugs can make a user's eyes appear shut or distorted.

Apple Vision Pro technology

The Apple Vision Pro operates as a standalone device, powered by an M2 processor and R1 co-processor. This dual-chip design facilitates immersive spatial experiences, with the M2 handling visionOS and graphics processing, while the dedicated R1 manages camera input, sensors, and microphones.

The M2 and R1 work in tandem. According to a reference in Xcode, the Apple Vision Pro has 16GB of RAM, matching the developer kits. Customers can configure the device with 256GB, 512GB, or 1TB of storage.

With only 12 milliseconds of lag from camera to display, users are unlikely to notice any delay. Passthrough works best in well-lit environments, and it is precise enough that depth perception is unaffected.
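To put the 12-millisecond figure in perspective, it can be expressed in display refreshes. The sketch below simply divides the latency by the time between refreshes. The refresh rates it uses (90, 96, and 100 Hz) are illustrative assumptions, not figures from this article.

```python
# Sketch: express the quoted camera-to-display latency in display frames.
# ASSUMPTION: the refresh rates in the demo loop are illustrative inputs only.

PASSTHROUGH_LATENCY_MS = 12.0  # camera-to-display lag quoted in the text


def frame_interval_ms(refresh_hz: float) -> float:
    """Time between successive display refreshes, in milliseconds."""
    return 1000.0 / refresh_hz


def latency_in_frames(latency_ms: float, refresh_hz: float) -> float:
    """How many refresh intervals fit inside the given latency."""
    return latency_ms / frame_interval_ms(refresh_hz)


if __name__ == "__main__":
    for hz in (90.0, 96.0, 100.0):  # hypothetical refresh rates
        print(f"{hz:.0f} Hz: frame = {frame_interval_ms(hz):.2f} ms, "
              f"12 ms = {latency_in_frames(PASSTHROUGH_LATENCY_MS, hz):.2f} frames")
```

At 90 Hz a refresh happens about every 11.1 ms, so 12 ms of camera-to-display lag amounts to roughly one frame.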

An eye-tracking system made up of LEDs and infrared cameras enables precise control simply by looking. For example, users can glance at a text input field and then fill it in using voice commands.
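Apple does not document how visionOS turns a gaze into a selection, so the following is only a generic illustration of the idea: a ray-plane hit test that finds which flat UI element, such as a text field, a gaze ray lands on. The `Window` type, the coordinate convention (metres, viewer at the origin looking toward negative z), and the assumption that every element faces the viewer squarely are all hypothetical simplifications.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Window:
    """Hypothetical flat UI element (e.g. a text field) facing the viewer squarely."""
    name: str
    center: Vec3   # (x, y, z) in metres; z is the depth of the element's plane
    width: float
    height: float


def gaze_target(origin: Vec3, direction: Vec3, windows: Sequence[Window]) -> Optional[str]:
    """Return the name of the nearest element hit by the gaze ray, or None."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0:
        return None  # ray runs parallel to every element's plane
    best_t, best_name = float("inf"), None
    for w in windows:
        cx, cy, cz = w.center
        t = (cz - oz) / dz  # ray parameter where it crosses this element's plane
        if t <= 0:
            continue  # plane lies behind the viewer
        px, py = ox + t * dx, oy + t * dy  # intersection point on that plane
        inside = abs(px - cx) <= w.width / 2 and abs(py - cy) <= w.height / 2
        if inside and t < best_t:
            best_t, best_name = t, w.name  # keep the closest hit
    return best_name


if __name__ == "__main__":
    ui = [
        Window("search_field", (0.0, 0.0, -2.0), 0.6, 0.1),
        Window("photo", (1.0, 0.0, -3.0), 1.0, 0.8),
    ]
    print(gaze_target((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), ui))  # search_field
    print(gaze_target((0.0, 0.0, 0.0), (1.0, 0.0, -3.0), ui))  # photo
```

Picking the nearest hit matters when elements overlap in depth: the element closest to the viewer is the one the user is most plausibly looking at.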

The front glass cover acts as a lens for the headset's cameras and sensors. In total, the aluminum and glass enclosure houses 12 cameras, five sensors, and six microphones, which contributes to the headset weighing slightly more than other VR headsets on the market.

Two high-resolution cameras together transmit more than one billion pixels per second to the displays. Each display has a resolution of 3,660 by 3,200 pixels, or around 3,380 pixels per inch. The display system uses a micro-OLED backplane with pixels 7.5 microns wide, so approximately 50 of them fit in the space of a single iPhone pixel. A custom three-element lens magnifies each screen and provides a wide field of view.
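The display figures above can be cross-checked with a few lines of arithmetic. The sketch below derives pixel density from the 7.5-micron pixel width (1 inch is 25,400 microns), counts pixels per panel and across both panels, and estimates how many headset pixels fit in one iPhone pixel. The iPhone density of 460 ppi (the iPhone 15 Pro's figure) is an assumption added for the comparison; it is not stated in this article.

```python
MICRONS_PER_INCH = 25_400        # 1 inch = 25.4 mm
PIXEL_PITCH_UM = 7.5             # pixel width quoted in the text
PANEL_W, PANEL_H = 3_660, 3_200  # per-display resolution quoted in the text
IPHONE_PPI = 460                 # ASSUMPTION: iPhone 15 Pro density, not from this article


def ppi_from_pitch(pitch_um: float) -> float:
    """Pixels per inch for a square pixel grid with the given pitch in microns."""
    return MICRONS_PER_INCH / pitch_um


vision_ppi = ppi_from_pitch(PIXEL_PITCH_UM)
pixels_per_panel = PANEL_W * PANEL_H
pixels_total = 2 * pixels_per_panel  # one panel per eye
# Area ratio: how many headset pixels fit inside one iPhone pixel.
pixels_per_iphone_pixel = (vision_ppi / IPHONE_PPI) ** 2

print(f"{vision_ppi:.0f} ppi")                    # 3387 ppi
print(f"{pixels_per_panel:,} pixels per panel")  # 11,712,000 pixels per panel
print(f"{pixels_total:,} pixels in total")       # 23,424,000 pixels in total
print(f"~{pixels_per_iphone_pixel:.0f} headset pixels per iPhone pixel")  # ~54 headset pixels per iPhone pixel
```

The derived density of about 3,387 ppi agrees with the quoted figure of around 3,380 ppi, and under the assumed iPhone density roughly 54 headset pixels fit in one iPhone pixel, in line with the "approximately 50" quoted in the text.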

LiDAR and a TrueDepth camera work together to generate a fused 3D map of the environment. Infrared flood illuminators, together with other sensors, improve hand tracking in low-light conditions.

To optimize size, weight, and heat management, the Apple Vision Pro forgoes an onboard battery. A fan cools the processors, with vent holes at the top and bottom of the headset. Although some areas of the exterior may feel warm during use, the Light Seal keeps the device from becoming uncomfortably hot on the user's face.

The multiple cameras on the Apple Vision Pro can capture "spatial" 3D content, and users can view recorded spatial videos in the Photos app directly on the headset. At launch, more than 150 3D movies were available in the Apple TV app.

Basic 3D video recording arrived on the iPhone 15 Pro with iOS 17.2. The iPhone captures spatial video at 1080p and 30 frames per second, and the headset can play it back natively.

While spatial videos captured on the Apple Vision Pro offer more depth, those recorded on the iPhone appear crisper. Either way, future hardware is likely to make today's spatial videos look dated by comparison.

Optic ID, privacy, and security

For security, Apple introduces Optic ID, an iris-scanning system. Like Face ID and Touch ID, Optic ID authenticates the user, authorizes Apple Pay transactions, and manages other security-related functions.

Apple explains that Optic ID uses invisible LED light sources to analyze the iris and compares it with enrolled data stored in the Secure Enclave, a process similar to the one used for Face ID and Touch ID. The Optic ID data is fully encrypted, is not accessible to apps, and never leaves the device.

In line with Apple's commitment to privacy, eye-tracking data, including where the user looks on the display, is not shared with Apple or external websites. Instead, data from sensors and cameras is processed at the system level, so apps can deliver spatial experiences without necessarily needing access to specific details about the user or their environment.

Apple VR, AR, or MR

Apple has deliberately steered clear of conventional labels such as "Apple VR" for the experiences offered by the Apple Vision Pro. Instead, the company has opted for its own marketing terminology, emphasizing terms like "Spatial Computing" to describe the innovative features and capabilities of its headset.

The classification of the Apple Vision Pro has sparked some debate. Some call it Apple VR because it is an enclosed headset that displays rendered content, while others prefer Apple AR because passthrough places apps in the user's real surroundings, with the Digital Crown letting users dial immersion up or down. Even the term Mixed Reality (MR) doesn't quite capture it. Apple's chosen term, Spatial Computing, describes the experience well, even if it is a marketing designation.

In essence, while Oculus and PlayStation VR are easily labeled as VR headsets with virtual reality as their primary focus, the Apple Vision Pro treats VR as a feature rather than its sole purpose. In Apple's terminology during keynotes and developer sessions, 2D apps are referred to as "windows," 3D objects as "volumes," and fully immersive VR experiences as "spaces."
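To make Apple's three terms concrete, here is a tiny, hypothetical Python model of the distinction. It is not Apple's API: in SwiftUI for visionOS, the corresponding scene types are `WindowGroup` for windows, a `WindowGroup` with `.windowStyle(.volumetric)` for volumes, and `ImmersiveSpace` for spaces. The `classify` rule below is an assumed rule of thumb for illustration only.

```python
from enum import Enum


class Presentation(Enum):
    """Apple's three presentation terms, as described in this article."""
    WINDOW = "window"  # 2D app content
    VOLUME = "volume"  # bounded 3D object shown alongside other apps
    SPACE = "space"    # fully immersive VR experience


def classify(is_3d: bool, fully_immersive: bool) -> Presentation:
    """Hypothetical rule of thumb mapping content traits to a presentation style."""
    if fully_immersive:
        return Presentation.SPACE
    if is_3d:
        return Presentation.VOLUME
    return Presentation.WINDOW


if __name__ == "__main__":
    print(classify(is_3d=False, fully_immersive=False).value)  # window
    print(classify(is_3d=True, fully_immersive=False).value)   # volume
    print(classify(is_3d=True, fully_immersive=True).value)    # space
```

Checking full immersion first reflects the idea that VR is one mode among several on this headset rather than its only purpose.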

visionOS

The software powering the Apple Vision Pro is referred to as visionOS. Apps from iPhone and iPad seamlessly operate on the headset with minimal developer intervention. While compatible apps are displayed in the 3D space, they retain a 2D window format, constraining content within that designated space.

Native apps on the Apple Vision Pro can feature 3D effects, and developers can attach controls and information to the edges of a window, which Apple calls "ornaments," to enhance user interactions. Menus and controls appear dynamically in front of or beside the app, making the experience more immersive and interactive.

visionOS runs apps in a 3D space and lets developers bring objects created in the USDZ format into that spatial environment. However, building experiences unique to visionOS may require specific frameworks, and apps developed with ARKit appear as 2D windows within visionOS.
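USDZ, the format mentioned above, is a packaging format from Pixar's Universal Scene Description. As we understand the USDZ specification, a package is an uncompressed, unencrypted zip archive whose first file is a native USD layer (`.usd`, `.usda`, or `.usdc`), with each file's data aligned to 64 bytes. The sketch below checks the first two rules using Python's standard `zipfile` module. It skips the alignment rule, and the helper names are hypothetical, so treat it as a quick sanity check rather than a validator; tools such as Pixar's `usdchecker` are the authoritative route.

```python
import io
import zipfile

USD_LAYER_EXTENSIONS = (".usd", ".usda", ".usdc")


def looks_like_usdz(data: bytes) -> bool:
    """Rough structural check of a USDZ package held in memory (hypothetical helper).

    Checks that the archive is a zip whose entries are stored uncompressed and
    unencrypted, and that its first entry is a native USD layer. It does NOT
    check the 64-byte data alignment that the USDZ format also requires.
    """
    try:
        with zipfile.ZipFile(io.BytesIO(data)) as archive:
            entries = archive.infolist()  # entries in archive order
    except zipfile.BadZipFile:
        return False
    if not entries:
        return False
    for entry in entries:
        if entry.compress_type != zipfile.ZIP_STORED:
            return False  # USDZ packages must not be compressed
        if entry.flag_bits & 0x1:
            return False  # bit 0 of the general-purpose flags marks encryption
    return entries[0].filename.lower().endswith(USD_LAYER_EXTENSIONS)


def build_demo_archive(names, compression=zipfile.ZIP_STORED) -> bytes:
    """Build a tiny in-memory zip for trying out looks_like_usdz (demo only)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=compression) as archive:
        for name in names:
            archive.writestr(name, "#usda 1.0\n")
    return buffer.getvalue()


if __name__ == "__main__":
    print(looks_like_usdz(build_demo_archive(["scene.usda", "textures/albedo.png"])))  # True
    print(looks_like_usdz(build_demo_archive(["scene.usda"], zipfile.ZIP_DEFLATED)))   # False
```

Storing files uncompressed is what lets a viewer read assets such as textures straight out of the package without unpacking it first.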

While full Apple VR experiences were not the keynote's central focus, they do exist. Apple intends to produce multiple 360-degree videos for users to immerse themselves in, alongside third-party VR apps.

The visionOS SDK was released to developers in late June 2023, featuring a simulator for testing in a controlled environment. However, full on-device testing became feasible only when hardware kits and sessions became available in July.

Due to limited access to testing hardware, many developers chose not to make their existing iPad apps available in the visionOS App Store at launch. Without adequate testing, they were uncertain about their apps' usability and concerned about potential customer complaints.

At the launch on February 2, 2024, approximately 600 native applications were available. This number is expected to increase rapidly as more developers gain access to the hardware.

Spatial Computing competition

Although Apple is a recent entrant in Spatial Computing, it is not the only contender in the market. Competitors have been exploring augmented reality (AR) and other extended-reality solutions for more than a decade.

Google has shifted its focus away from Glass and Microsoft has moved on from HoloLens, but other alternatives remain. Xreal Air is one of the few products trying to offer AR experiences today, despite the current limitations of consumer-level technology. Many players in the tech industry continue to explore AR and extended reality.

Using Apple Vision Pro (pre-release)

Apple is actively supporting developers building apps for the Spatial Computing platform ahead of release. It has provided developer labs and kits, giving some people hands-on experience with the headset beyond the initial WWDC announcement, and their impressions have since reached the public.

Early impressions from people who have used the headset are broadly consistent. As a first-generation product, the Apple Vision Pro enters a competitive market, but its blend of augmented reality (AR) and virtual reality (VR) with high-end technology creates an experience that feels seamless and effortless. As the product evolves, that distinct combination may set it apart in the fast-moving field of spatial computing.

Users familiar with competing headsets have noted that the Apple Vision Pro offers a broader and taller field of view. The video feed's limitations are apparent in certain lighting conditions, such as extremely bright or dim environments, but these limitations are not overly distracting.

In pre-release demos, the eye tracking and gesture controls have been widely praised. These systems appear well designed and executed, with fewer of the usual difficulties associated with new interaction paradigms.

Dedicated apps work seamlessly, integrating well with the environment, making them a strong option for enterprise developers in areas like job or maintenance training. While Apple suggests that iPad apps can be ported with minimal effort, developers may need to optimize their apps to ensure readability and controls translate effectively to the spatial computing platform.

Weight doesn't seem to be a major issue, though wearing the headset continuously for more than an hour may cause fatigue. Thanks to the high-quality displays, eye strain is not a prevalent concern.

Apple Vision Pro release date and price

Apple typically announces products shortly before their release, but because the Apple Vision Pro is a new platform, the company allowed ample lead time. Developers had approximately a year to work on their visionOS experiences, and access to developer kits required an application and was subject to strict secrecy.

Initial estimates put first-year shipments between 400,000 and 1 million units. The high price may be a barrier, but ambitious supply chain projections point to 10 million units shipped within three years. Pricing for the Mauritius market will be announced shortly, and the product will soon be available for sale at iStore Mauritius.